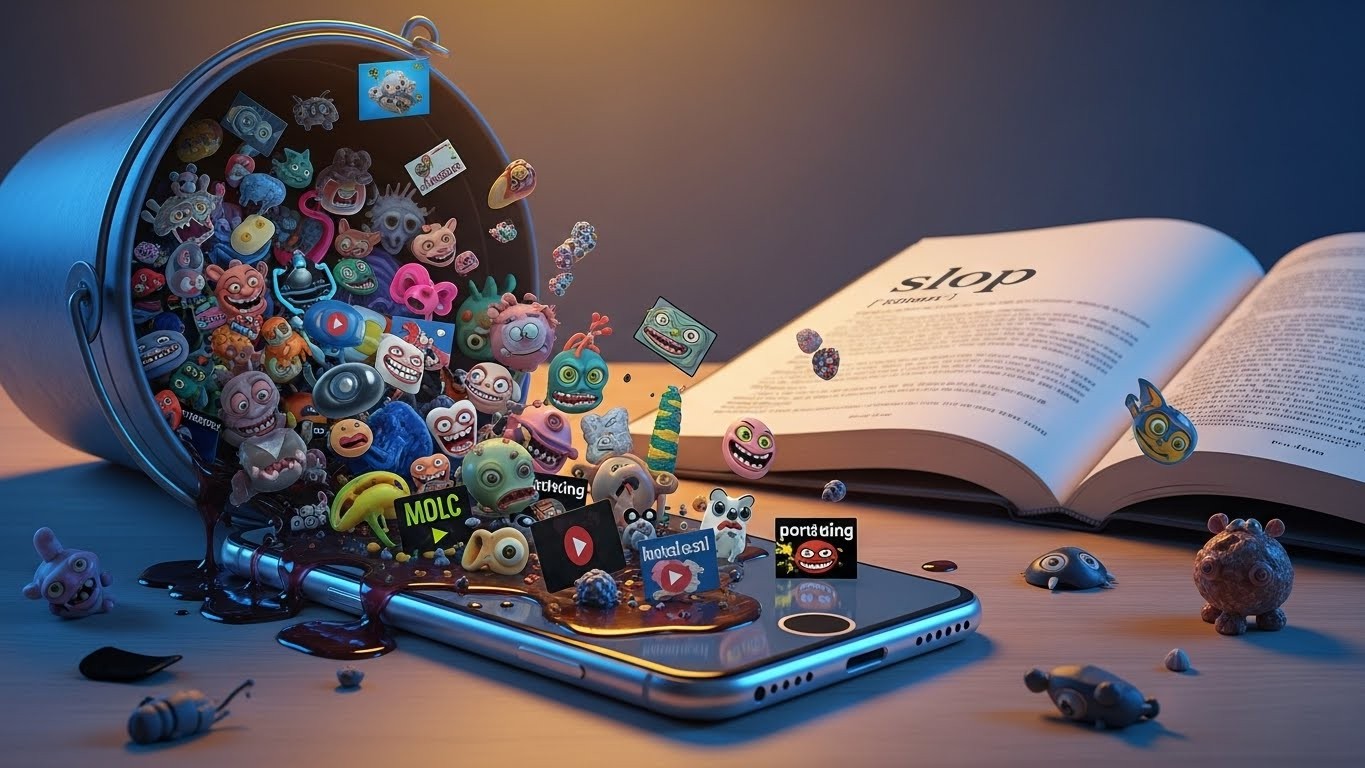

Ever scroll through your feed and suddenly stumble on something that looks… off? Like a video of a creature morphing in ways that make no sense, or an image that’s just a little too weird to be real? I’ve had those moments more often lately, and it turns out I’m not alone. There’s a growing unease about the sheer volume of strange, low-quality stuff popping up online, much of it whipped up by artificial intelligence in seconds.

That unease has now gotten official recognition. A major dictionary has named “slop” its word of the year for 2025, directly tying it to the surge in AI-created content that’s flooding our digital spaces. It’s a term that’s evolved from something mundane—like leftover food for animals—to a sharp critique of what’s happening on our screens.

Why “Slop” Captured the Moment

In my view, this choice feels spot on. We’ve all seen the hype around generative AI tools over the past few years, promising to revolutionize creativity and productivity. But now, the flip side is becoming impossible to ignore. “Slop” perfectly encapsulates that disappointing output: material churned out in massive quantities, often lacking depth, accuracy, or real value.

Think about it. What started as impressive demos has turned into an endless stream of mediocre images, videos, and even music. It’s not just a few oddities here and there; it’s everywhere. And perhaps the most interesting aspect is how quickly public sentiment has shifted from awe to wariness.

The New Definition in Context

The updated entry describes it clearly: digital content that’s low quality, produced in bulk using artificial intelligence. Simple, yet it hits the nail on the head. Previously, the word evoked something unappetizing or worthless, like waste products. Now, it’s squarely aimed at the byproducts of AI systems that prioritize speed and volume over substance.

I’ve found that this redefinition resonates because it gives us language for something we’ve been feeling intuitively. When you encounter a post that seems generated just to grab attention—full of errors or bizarre elements—calling it “slop” feels right. It’s dismissive in a way that matches the content’s lack of effort.

Low-quality digital content produced rapidly by AI isn’t just clutter—it’s reshaping how we interact with information online.

That shift in meaning didn’t happen in a vacuum. It’s a direct response to real-world trends that have accelerated dramatically.

The Flood of AI Content on Social Platforms

Social media has become ground zero for this phenomenon. Platforms are increasingly filled with AI-made videos and images designed to go viral, regardless of quality. One particularly odd example involved a strange creature transforming in impossible ways inside a crowded public space—racking up hundreds of millions of views.

These aren’t isolated incidents. Platforms have rolled out new features and dedicated feeds for generated videos, encouraging even more creation. Meanwhile, tools that turn text prompts into sophisticated video have hit the market, lowering the barrier to entry further. The result? A constant influx that can be hard to distinguish from human-made work at first glance.

What bothers me most is how engagement drives it all. As long as these posts get likes, shares, and views, they generate revenue. Quality takes a back seat. It’s a cycle that’s tough to break in an attention economy.

- Bizarre morphing creatures in everyday settings

- Endless variations of trending memes with slight AI twists

- Images that look almost realistic but fall into the uncanny valley

- Videos scripted entirely by algorithms, lacking coherent storytelling

These examples illustrate just how pervasive it’s become. And it’s not limited to visuals.

AI Slop Invading Music and Audio

Music streaming services have faced their own battles. Reports emerged of tens of millions of tracks being removed because they were deemed spammy and AI-generated. Policies had to be created on the fly to address impersonation and low-effort uploads.

One case stood out: a project that gained a massive listener base without initially disclosing its synthetic origins. Only after a backlash was its description updated to make that clear. It raised tough questions about transparency and artist protection in an era where anyone, or any bot, can produce tracks instantly.

In my experience following tech trends, this highlights a broader tension. Generative tools democratize creation, which sounds great in theory. But when quantity overwhelms quality, it devalues genuine artistry. Listeners end up sifting through noise to find signal.

Shifting Public Attitudes Toward AI Tools

Recent surveys paint an interesting picture. Usage of popular AI platforms has dipped slightly over the past few months, with fewer people reporting that they turn to them regularly than did earlier in the year. It’s a small decline, but telling nonetheless.

Why the pullback? I suspect it’s a mix of fatigue and disappointment. Early excitement gave way to realizing the limitations—and the glut of subpar output those tools enable. When everything starts feeling generic or error-prone, the novelty wears off.

There’s also the misinformation angle. Low-quality generated content can spread false narratives just as easily as entertaining oddities. In a world already grappling with trust issues online, this adds fuel to the fire.

As AI becomes more ubiquitous, discernment becomes our most valuable skill.

What Makes Content Feel Like Slop?

Let’s break it down a bit. Not all AI output qualifies, of course. Thoughtful uses—like assisting with brainstorming or editing—can be valuable. The problem arises with mass production aimed at gaming algorithms rather than informing or entertaining meaningfully.

Common hallmarks include:

- Repetitive patterns that feel formulaic

- Subtle inaccuracies or hallucinations in text

- Visual artifacts, like extra fingers or warped proportions

- Lack of emotional depth or original insight

- Pure volume over craftsmanship

Spotting these has become a new kind of digital literacy. And honestly, it’s exhausting sometimes. Wouldn’t it be nicer if we could trust more of what we see?

Yet there’s an upside. This awareness might push developers toward better safeguards and higher standards. Maybe we’ll see more emphasis on quality controls, or on watermarking that identifies where a piece of content came from.

The Bigger Picture: Where Do We Go From Here?

Declaring “slop” word of the year isn’t just linguistic trivia. It’s a cultural marker. It signals that society is grappling with AI’s double-edged sword: incredible potential paired with real downsides.

In the coming years, I expect debates to intensify around regulation, ethics, and platform responsibility. How do we encourage innovation without drowning in mediocrity? Can we foster tools that prioritize human-like care in their outputs?

One hopeful sign is the growing call for transparency. When creators disclose AI assistance, it builds trust. When platforms stop rewarding pure engagement bait, it levels the playing field.

Personally, I’m optimistic that we’ll adapt. Humans have always navigated technological shifts, from printing presses to television. This feels similar—a messy transition period before norms settle.

But adaptation requires vigilance. As consumers, curating our feeds and supporting quality creators matters. As users of AI, thinking critically about what we generate and share makes a difference.

Looking back, 2025 might be remembered as the year we named the problem. Recognizing “slop” is the first step toward demanding better. The question is: will the tech world rise to the challenge, or will the flood continue unchecked?

I’ve been pondering this a lot lately. On one hand, generative AI opens doors for people without traditional skills to express themselves. On the other, unchecked proliferation risks burying the internet in noise.

Balance seems key. Tools that assist rather than automate entirely. Platforms that reward depth over virality. Users who value authenticity.

Perhaps the backlash against slop will spark a renaissance of sorts—more appreciation for human-crafted work. Time will tell. For now, having a word to describe the issue feels like progress in itself.

What do you think? Are you seeing more of this low-quality content in your daily scroll? Has it changed how you use AI tools? The conversation is just beginning, and it’s one worth having.

In the end, language evolves with us. Choosing “slop” reflects where we are: excited about technology’s possibilities, but increasingly cautious about its pitfalls. It’s a reminder that progress isn’t linear, and critical thinking remains essential.

As we head further into this AI-driven era, staying aware—and a little skeptical—might be our best defense against the slop.