Have you ever paused mid-chat with an AI to wonder what’s powering those clever responses? It’s not just code and servers; it’s a massive amount of electricity, and the numbers are climbing fast. The latest AI models, especially the newest large language models, are pushing the boundaries of innovation, but at a cost that’s rarely discussed. I’ve always been fascinated by how technology shapes our world, but the energy appetite of these systems is starting to feel like a plot twist we didn’t see coming.

The Rising Energy Demands of AI

Artificial intelligence is no longer just a sci-fi dream; it’s a daily reality. From virtual assistants to content generators, AI is woven into our lives. But behind the scenes, the energy consumption of these models is skyrocketing. The newest AI systems are estimated to use significantly more power than their predecessors, with some experts estimating a jump of up to 20 times the energy per response of earlier versions. That’s not just a small uptick; it’s a seismic shift.

Why does this matter? Well, for one, it’s hitting our wallets. As tech giants build massive data centers to fuel their AI ambitions, the demand for electricity is driving up costs for everyday consumers. It’s like we’re all subsidizing the AI revolution without even realizing it. And then there’s the environmental angle—more energy means a bigger carbon footprint, which is a tough pill to swallow in the midst of a climate crisis.

How Much Energy Are We Talking About?

Pinpointing the exact energy usage of the latest AI models is tricky because, frankly, the industry isn’t very forthcoming. There’s no rule forcing tech companies to spill the beans on their energy stats, so most don’t. Imagine buying a car without knowing its fuel efficiency—that’s the kind of opacity we’re dealing with here. Some researchers have stepped in to fill the gap, and their findings are eye-opening.

For example, a single query to an advanced AI model in 2023 might have used around 2 watt-hours of electricity. Fast forward to today, and estimates suggest that the newest models can burn through up to 40 watt-hours for a medium-length response. To put that in perspective, that’s roughly enough energy to fully charge a typical smartphone two or three times. On average, these models are clocking in at around 18 watt-hours per response, about nine times the 2023 figure.
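The comparisons in this paragraph are simple ratios, and it can help to see the arithmetic spelled out. The sketch below uses the 2, 18, and 40 watt-hour estimates quoted above. The 15 Wh phone battery is an assumption (a round number for a modern smartphone), and charging losses are ignored.

```python
# Per-response energy estimates quoted in the text, in watt-hours.
BASELINE_WH = 2.0   # 2023-era model
AVERAGE_WH = 18.0   # newest models, average
PEAK_WH = 40.0      # newest models, medium-length response, upper estimate

# ASSUMPTION: a hypothetical round figure for a modern smartphone battery.
PHONE_BATTERY_WH = 15.0


def ratio_to_baseline(wh_per_query: float, baseline_wh: float = BASELINE_WH) -> float:
    """How many times more energy a response uses than the 2023 baseline."""
    return wh_per_query / baseline_wh


def phone_charges(wh: float, battery_wh: float = PHONE_BATTERY_WH) -> float:
    """Full phone charges the given energy could supply (ignores charging losses)."""
    return wh / battery_wh


for label, wh in [("average", AVERAGE_WH), ("peak", PEAK_WH)]:
    print(f"{label}: {ratio_to_baseline(wh):.0f}x baseline, "
          f"~{phone_charges(wh):.1f} phone charges")
```

Running it prints `average: 9x baseline, ~1.2 phone charges` and `peak: 20x baseline, ~2.7 phone charges`. The "up to 20 times" figure is simply the 40 Wh upper estimate divided by the 2 Wh baseline.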

> “It’s mind-boggling that we use these tools daily without any clue about their efficiency or emissions. This should be a priority for regulators.”
>
> – AI and climate researcher

The Transparency Problem

Here’s where things get frustrating. Unlike cars or appliances, AI models come with no energy efficiency labels. Over 80% of large language model traffic happens on systems with zero environmental disclosures. It’s like flying blind in a storm. Researchers are practically begging for more openness, arguing that understanding AI’s environmental impact is critical to making informed decisions about its future.

I’ll admit, it’s a bit unsettling to think we’re using tools that could be quietly draining resources while we’re none the wiser. Perhaps the most alarming part is how this lack of transparency lets companies sidestep accountability. If we don’t know the numbers, how can we push for greener solutions?

Why AI Models Are So Power-Hungry

So, what’s driving this energy surge? It comes down to complexity. Newer AI models are designed to handle more intricate tasks, like long-form reasoning or generating detailed content. Larger models perform more computation for every word they produce, and reasoning-focused models often work through lengthy intermediate steps before giving an answer. This requires more computational power, both during training (when the model learns) and inference (when it responds to you). Think of it like trading a compact car for a monster truck: both get you places, but one guzzles a lot more fuel.

Training an AI model is particularly energy-intensive. It involves running massive datasets through complex algorithms, often for weeks or months, on specialized hardware. Once trained, every query still demands significant power to process. The more advanced the model, the hungrier it gets. It’s a bit like feeding a dragon—you need a lot of resources to keep it going.
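A common back-of-envelope estimate for training energy multiplies the number of accelerators by their average power draw and the hours they run, then scales the result by the data center’s power usage effectiveness (PUE) to account for cooling and other overhead. The sketch below follows that approach. Every input (1,000 accelerators, 500 W each, 30 days, a PUE of 1.2) is a hypothetical placeholder, not a figure for any real model.

```python
from dataclasses import dataclass


@dataclass
class TrainingRun:
    """A hypothetical training job; all values passed in are illustrative assumptions."""

    accelerators: int        # number of GPUs or other accelerators
    avg_power_watts: float   # average power draw per accelerator, in watts
    days: float              # wall-clock training time, in days
    pue: float = 1.2         # power usage effectiveness: total facility energy / IT energy

    def energy_mwh(self) -> float:
        """Estimated facility-level energy for the whole run, in megawatt-hours."""
        hours = self.days * 24
        it_energy_wh = self.accelerators * self.avg_power_watts * hours
        facility_energy_wh = it_energy_wh * self.pue  # adds cooling and other overhead
        return facility_energy_wh / 1e6  # Wh -> MWh


def equivalent_queries(run: TrainingRun, wh_per_query: float) -> float:
    """How many queries at `wh_per_query` would use the same energy as the run."""
    return run.energy_mwh() * 1e6 / wh_per_query


# Hypothetical run: 1,000 accelerators averaging 500 W each for 30 days.
run = TrainingRun(accelerators=1_000, avg_power_watts=500.0, days=30)
print(f"Training energy: {run.energy_mwh():,.0f} MWh")
print(f"Same energy as ~{equivalent_queries(run, 18.0):,.0f} queries at 18 Wh each")
```

With these made-up inputs the run comes to about 432 MWh, roughly the same as 24 million responses at the 18 Wh average. That comparison is why inference matters too: a popular service can match a training run’s energy in ordinary daily use.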

The Consumer Cost

Here’s where it hits home. As tech companies ramp up their AI operations, they’re building sprawling data centers that consume enormous amounts of electricity. This isn’t just a tech problem—it’s a societal one. Higher energy demands are pushing up electricity rates, and guess who’s footing the bill? You and me.

Experts warn that residential utility customers are essentially subsidizing these corporate giants. It’s a bit like paying for someone else’s lavish party. In some regions, electricity rates are already climbing as data centers multiply. This transfer of wealth from everyday people to big tech is raising eyebrows, and it’s not hard to see why.

| AI Model | Estimated Energy per Query (Watt-Hours) | Compared to 2023 Baseline |
|---|---|---|
| Early AI (2023) | 2 | Baseline |
| Advanced Model (2025) | 18-40 | About 9x to 20x |
| High-End Reasoning Model | 40+ | 20x or more |
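To see how per-query figures turn into the kind of load that shows up on the grid, the sketch below scales the table’s estimates to a full day of traffic. The daily query volume and the flat electricity price are hypothetical placeholders, not reported figures. Real data center power contracts, and the way those costs reach household rates, are far more complicated than a single price per kilowatt-hour.

```python
# Per-query estimates from the table above, in watt-hours.
WH_PER_QUERY = {
    "2023 baseline": 2.0,
    "2025 average": 18.0,
    "2025 upper estimate": 40.0,
}

QUERIES_PER_DAY = 100_000_000  # ASSUMPTION: hypothetical daily volume for one large service
PRICE_USD_PER_KWH = 0.15       # ASSUMPTION: hypothetical flat electricity price


def daily_energy_mwh(wh_per_query: float, queries_per_day: int) -> float:
    """Total energy for one day of queries, in megawatt-hours."""
    return wh_per_query * queries_per_day / 1e6


def daily_cost_usd(wh_per_query: float, queries_per_day: int, usd_per_kwh: float) -> float:
    """Electricity cost for one day of queries at a flat price per kilowatt-hour."""
    kwh = wh_per_query * queries_per_day / 1e3
    return kwh * usd_per_kwh


for label, wh in WH_PER_QUERY.items():
    mwh = daily_energy_mwh(wh, QUERIES_PER_DAY)
    cost = daily_cost_usd(wh, QUERIES_PER_DAY, PRICE_USD_PER_KWH)
    print(f"{label}: {mwh:,.0f} MWh/day, about ${cost:,.0f}/day")
```

Under these assumptions, moving from the 2 Wh baseline to the 18 Wh average takes a day of traffic from 200 MWh to 1,800 MWh, and the daily bill from about $30,000 to about $270,000.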

Environmental Impacts: A Growing Concern

Beyond the financial hit, there’s a bigger issue at play: the environment. The tech industry’s growing energy demands are putting pressure on global efforts to combat climate change. Data centers already account for a significant chunk of global electricity use, and AI is only adding to that load. Some companies have scaled back their climate pledges, prioritizing AI development over sustainability. It’s a tough trade-off.
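A rough way to link energy use to carbon footprint is to multiply the electricity consumed by the carbon intensity of the grid supplying it, measured in grams of CO2-equivalent per kilowatt-hour. The two intensities below are hypothetical round numbers chosen only to contrast a fossil-heavy grid with a low-carbon one. Real values vary widely by region and even by hour. The sketch covers operational emissions only and ignores the emissions from manufacturing the hardware.

```python
# ASSUMPTION: hypothetical grid carbon intensities, in grams CO2e per kWh.
GRID_G_CO2E_PER_KWH = {
    "fossil-heavy grid": 400.0,
    "low-carbon grid": 50.0,
}


def emissions_tonnes(energy_kwh: float, g_co2e_per_kwh: float) -> float:
    """Operational emissions, in tonnes CO2e, for a given amount of electricity."""
    return energy_kwh * g_co2e_per_kwh / 1e6  # grams -> tonnes


# One million responses at the ~18 Wh average estimate.
energy_kwh = 1_000_000 * 18.0 / 1e3  # Wh -> kWh, i.e. 18,000 kWh

for grid, intensity in GRID_G_CO2E_PER_KWH.items():
    print(f"{grid}: {emissions_tonnes(energy_kwh, intensity):.1f} t CO2e")
```

The same 18,000 kWh works out to about 7.2 tonnes on the assumed fossil-heavy grid and about 0.9 tonnes on the assumed low-carbon grid. That gap is why where and how data centers source their power matters as much as how much they use.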

I can’t help but wonder: are we trading short-term tech gains for long-term planetary pain? The lack of clear data makes it hard to know the full scope, but the signs aren’t great. If AI’s energy use keeps climbing unchecked, we could be looking at a serious environmental setback.

What Can Be Done?

So, where do we go from here? The good news is that solutions exist, but they require action. Here are a few steps that could make a difference:

- Push for Transparency: Regulators could mandate energy and emissions reporting for AI models, giving consumers and policymakers the data they need.

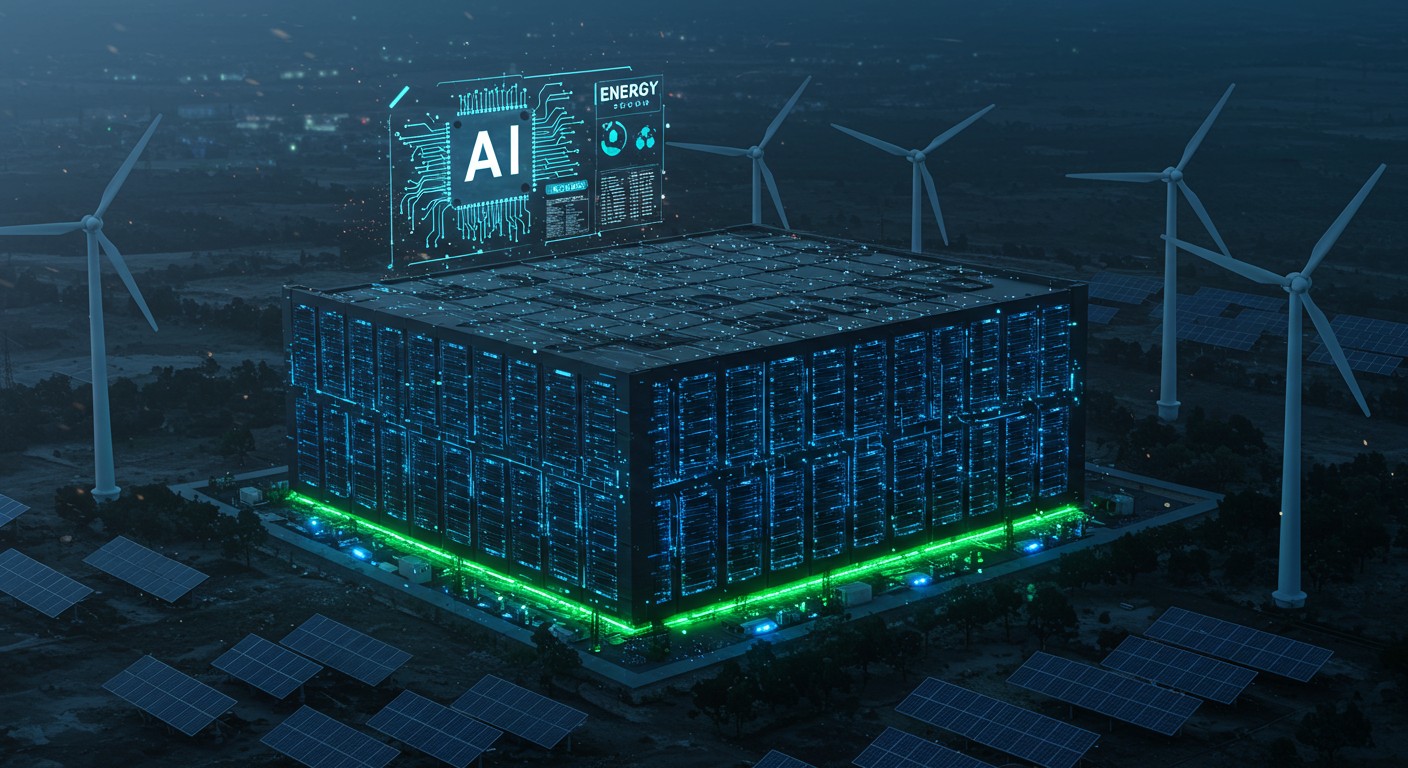

- Invest in Green Tech: Tech companies could prioritize renewable energy sources for their data centers, reducing their carbon footprint.

- Optimize AI Design: Developers can work on creating more energy-efficient models without sacrificing performance.

- Consumer Awareness: We can all demand more accountability from the tech we use, pushing for sustainability as a priority.

It’s not all doom and gloom. Some companies are already experimenting with green AI, designing models that use less power or run on renewable energy. But it’s going to take a collective effort—governments, companies, and users—to turn the tide.

The Bigger Picture

AI is transforming our world, but at what cost? The energy demands of these systems are a wake-up call, reminding us that innovation doesn’t come free. As someone who loves tech, I find it both exciting and a bit unnerving to see how fast things are moving. The challenge now is balancing progress with responsibility.

We’re at a crossroads. Will we let AI’s energy hunger run wild, or will we demand smarter, greener solutions? I’m betting on the latter, but it’s going to take work. Next time you fire up an AI tool, maybe take a second to think about the invisible costs—and what we can do to keep them in check.

This isn’t just about numbers or tech specs; it’s about the kind of future we want. AI has the power to solve incredible problems, but only if we manage its impact wisely. So, what’s your take? Are we ready to tackle the energy challenge, or are we too caught up in the AI hype to notice?