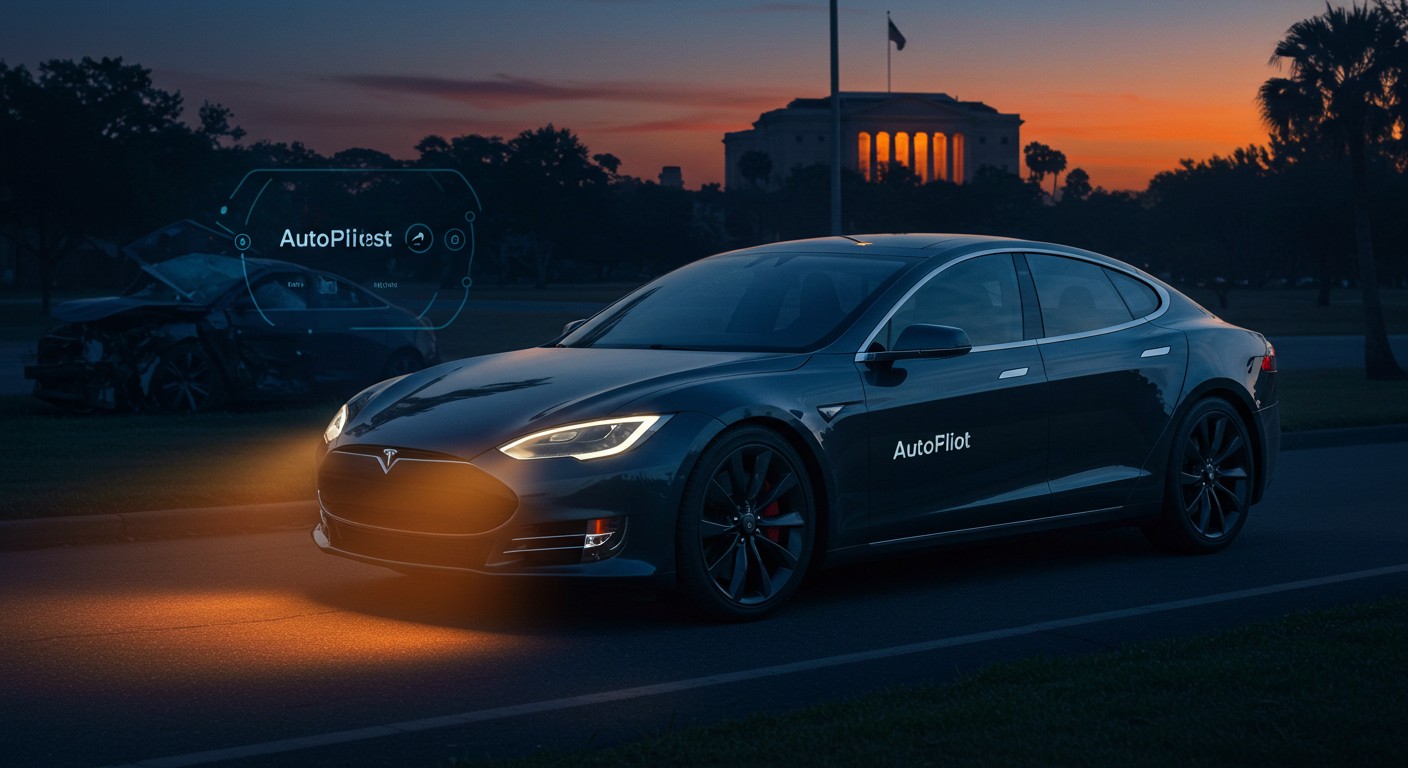

Have you ever wondered how much trust we place in technology, especially when it’s behind the wheel of a car? In 2019, a devastating crash in Florida involving Tesla’s Autopilot system left one person dead and another severely injured, sparking a fierce legal battle in which the plaintiffs are now seeking a staggering $345 million in damages. This case isn’t just about money; it’s about who bears responsibility when cutting-edge tech fails to deliver on its promises.

The Fatal Crash That Shook Tesla’s Autopilot Claims

The incident took place in Key Largo, Florida, where a Tesla Model S driver relied on the company’s Enhanced Autopilot, a system designed to assist with steering, braking, and acceleration. What unfolded was a tragedy that raised uncomfortable questions about the limits of self-driving technology and the way it’s marketed to the public. As the driver accelerated through an intersection, disaster struck, leaving a young woman dead and her partner grappling with life-altering injuries.

I’ve always been fascinated by how technology can transform our lives, but this case makes me pause. Can we really hand over control to a machine and expect flawless results? The plaintiffs in this lawsuit argue that Tesla’s bold claims about Autopilot’s capabilities misled drivers, while the company insists human error was to blame. Let’s dive into the details.

What Happened on That Fateful Day?

In the quiet coastal town of Key Largo, a Tesla Model S sped through an intersection at over 60 miles per hour. The driver, distracted by a dropped phone, trusted Autopilot to handle the road ahead. Unfortunately, the system didn’t detect an obstacle—a parked car with two people standing nearby. The collision was catastrophic.

The crash was so severe that one victim was found 75 feet from the point of impact.

– Court testimony

The young woman, just 22 years old, lost her life instantly. Her partner survived but faced a grueling recovery from broken bones, a traumatic brain injury, and deep emotional scars. The lawsuit, filed by the woman’s family and her injured partner, seeks $109 million in compensatory damages for medical costs, pain, and suffering, plus $236 million in punitive damages to hold Tesla accountable.

It’s heartbreaking to think about the lives shattered in a split second. But what’s equally unsettling is the question of who—or what—was responsible. Was it the driver’s distraction, or did Tesla’s technology fall short?

Tesla’s Defense: Driver Error or Tech Triumph?

Tesla’s legal team has been adamant that the driver’s actions caused the crash. They argue he misused Autopilot by diverting his attention from the road, ignoring the system’s warnings to keep his hands on the wheel. In court, Tesla emphasized that Autopilot is a driver-assistance tool, not a fully autonomous system, and that proper use could have prevented the tragedy.

Here’s where things get murky. Tesla’s marketing has long touted Autopilot as a game-changer, with promises of safer roads and near-self-driving capabilities. Yet, the fine print tells a different story, urging drivers to stay vigilant. To me, this feels like a contradiction—how can you sell a dream of effortless driving while expecting users to treat it like a standard car?

- Driver responsibility: Tesla says the driver ignored warnings and was distracted.

- System limitations: Autopilot isn’t designed for every road or scenario.

- Clear communication: Tesla claims it informs users about proper use.

During the trial, Tesla’s lawyers painted the company as a pioneer working to save lives through innovation. They argued that a verdict against them could stifle progress in autonomous driving. But is that a fair defense when lives are at stake?

Plaintiffs’ Case: Was Autopilot Dangerously Flawed?

The plaintiffs’ attorneys took a different stance, arguing that Tesla knew Autopilot had serious flaws but failed to address them. They pointed to instances where the system struggled to detect obstacles, especially in complex environments like intersections. More damningly, they accused Tesla of exaggerating Autopilot’s abilities, creating a false sense of security for drivers.

Think about it: if a company tells you their car can practically drive itself, wouldn’t you feel tempted to relax a bit? The plaintiffs’ team leaned heavily on this, citing public statements from Tesla’s leadership that hyped up Autopilot’s potential. They argued that this misleading marketing encouraged risky behavior, setting the stage for disaster.

Tesla’s promises about Autopilot gave drivers a dangerous overconfidence.

– Plaintiffs’ attorney

Expert witnesses for the plaintiffs also testified about technical shortcomings, like Autopilot’s difficulty recognizing stationary objects. They claimed Tesla could have restricted the system’s use to safer roads but chose not to, prioritizing profits over safety.

The Bigger Picture: Trust in Technology

This lawsuit isn’t just about one tragic crash—it’s a wake-up call about our relationship with self-driving tech. As cars become smarter, we’re forced to grapple with tough questions. How much control should we surrender to machines? And when things go wrong, who pays the price?

In my view, the real issue here is trust. Tesla’s bold vision of a driverless future is inspiring, but it hinges on flawless execution. If drivers can’t rely on Autopilot to handle basic obstacles, or if they’re misled about its capabilities, that trust erodes fast.

| Issue | Tesla’s Claim | Plaintiffs’ Argument |
| --- | --- | --- |
| Driver Behavior | Driver was distracted and misused Autopilot | Driver trusted Tesla’s misleading claims |
| Tech Flaws | Autopilot is a driver-assist tool, not autonomous | System had known defects Tesla ignored |
| Marketing | Clear instructions provided to users | Exaggerated claims encouraged overreliance |

The table above sums up the core conflict. Both sides have compelling points, but the jury’s decision could set a precedent for how we regulate and trust autonomous vehicles.

The Human Cost of Innovation

Beyond the legal arguments, this case is a stark reminder of the human toll when technology falls short. The young woman’s family is left grieving a life cut short, while her partner faces a long road to recovery. No amount of money can undo that pain, but the lawsuit seeks to ensure accountability.

I can’t help but feel for the victims’ loved ones, who sat through the trial reliving that horrific day. Every time crash footage or photos appeared in court, they looked away, unable to bear the reminder. It’s a sobering contrast to the abstract debates about tech and progress.

- Compensatory damages: Cover medical bills, pain, and suffering.

- Punitive damages: Punish Tesla for alleged recklessness.

- Wrongful death claim: Seek justice for the victim’s family.

The $345 million demand reflects the plaintiffs’ belief that Tesla’s actions—or inaction—warrant serious consequences. Whether the jury agrees remains to be seen.

What’s at Stake for Tesla and Beyond?

This trial is a pivotal moment for Tesla. A verdict against the company could lead to stricter scrutiny of Autopilot and similar systems, potentially slowing the race toward fully autonomous cars. It might also embolden other plaintiffs to come forward, as Tesla has already faced multiple lawsuits over Autopilot-related incidents.

But the implications go beyond one company. The outcome could shape how we define responsibility in an era of smart vehicles. Should manufacturers bear the brunt when their tech fails, or should drivers always remain accountable? It’s a question that’ll linger long after the verdict.

A verdict against Tesla could send shockwaves through the self-driving industry.

– Automotive safety expert

Personally, I think the truth lies somewhere in the middle. Drivers need to stay engaged, but companies like Tesla have a duty to ensure their tech is as safe as advertised. Anything less is a betrayal of public trust.

Looking Ahead: Safer Roads or More Risks?

As we await the jury’s decision, one thing is clear: the road to autonomous driving is fraught with challenges. Tesla’s vision of a world where cars drive themselves is tantalizing, but cases like this remind us that we’re not there yet. Safety must come first, and that starts with transparency and accountability.

Perhaps the most sobering lesson is that technology, no matter how advanced, can’t replace human judgment entirely. Until we bridge that gap, we’ll need to tread carefully, balancing innovation with responsibility. What do you think—can we trust self-driving cars, or are we moving too fast?

This Florida lawsuit is more than a courtroom drama—it’s a glimpse into the future of transportation and the risks we’re willing to take. As the world watches, the verdict could redefine how we navigate the line between human control and machine precision.